Updating the Shared File

Now we can update the file in the Shared project. We can put types and methods in this file that we know will be used in more than one other project. For our case, we can use the model input and output schemas.

type SalaryInput = {

YearsExperience: float32

Salary: float32

}

[<CLIMutable>]

type SalaryPrediction = {

[<ColumnName("Score")>]

PredictedSalary: float32

}

The SalaryInput class has two properties that are both of type float32. The SalaryPrediction class is special where we need to put the CLIMutable attribute on it. That has one property that's also of type float32. This property has the ColumnName attribute on it to map to the output column from the ML.NET model.

There's one other type we can add to our shared file. We can create an interface that has a method to get our predictions that can be called from the client to the server.

type ISalaryPrediction = { getSalaryPrediction: float32 -> Async<string> }

In this type, we create a method signature called getSalaryPrediction which takes in a paramter of type float32 and it returns a type of Async of string. So this method is asynchornous and will return a string result.

Updating the Server

Next, we can update our server file. This file contains the code to run the web server and any other methods that we may need to call from the client.

To run the web app you have the following code:

let webApp =

Remoting.createApi()

|> Remoting.withRouteBuilder Route.builder

|> Remoting.fromValue predictionApi

|> Remoting.buildHttpHandler

let app =

application {

url "http://0.0.0.0:8085"

use_router webApp

memory_cache

use_static "public"

use_gzip

}

run app

The app variable creates an application instance and sets some properties of the web app, such as what the URL is, what router to use, and to use GZip compression. You can also add items such as using OAuth, set logging, or enable CORS.

The webApp variable creates the API and builds the routing. Both of these are based on the predictionApi variable which is based off the ISalaryPrediction type we defined in the shared file.

let predictionApi = { getSalaryPrediction =

fun yearsOfExperience -> async {

let prediction = prediction.PredictSalary yearsOfExperience

match prediction with

| p when p.PredictedSalary > 0.0f -> return p.PredictedSalary.ToString("C")

| _ -> return "0"

} }

The API has the one method we defined in the interface - getSalaryPrediction. This is where we implement that interface method. It takes in a variable, yearsOfExperience, and it runs an async method defined by the async keyword. In the brackets is what it should run.

All we are running in there is to use a prediction variable to call the PredictSalary method on it and pass in the years of experience variable to it. With the value from that we do a match expression and if the PredictedSalary property is greater than 0 we return that property formatted as a currency. If it is 0 or below, we just return the string "0".

But where did the prediction variable come from? Just above the API implementation, a new Prediction type is created.

type Prediction () =

let context = MLContext()

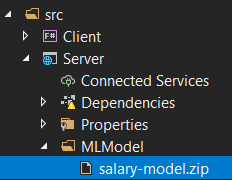

let (model, _) = context.Model.Load("./MLModel/salary-model.zip")

let predictionEngine = context.Model.CreatePredictionEngine<SalaryInput, SalaryPrediction>(model)

member __.PredictSalary yearsOfExperience =

let predictedSalary = predictionEngine.Predict { YearsExperience = yearsOfExperience; Salary = 0.0f }

predictedSalary

This creates the instance of the MLContext. It also loads in the model file, and creates a PredictionEngine instance from the model. Remember the SalaryInput and SalaryPrediction types are from the shared project. And notice that, when we load from the model, it returns a tuple. The first value returns the model whereas the second value returns the DataViewSchema. Since we don't need the DataViewSchema in our case, we can ignore it using an underscore (_) for that variable.

This type also creates a member method called PredictSalary. This is where we call the predictionEngine.Predict method and give it an instance of SalaryInput. Because F# is really good at inferring types, we can just give it the YearsExperience property and it knows that it is the SalaryInput type. We do need to supply the Salary property as well, but we can just set that to 0.0. Then, we return the predicted salary from this method. In F# we don't need to specify the return keyword. It automatically returns if it's the last item in the method.

Updating the Client

With the server updated to do what we need, we can now update the client to use the new information. Everything we need to update will be in the Index.fs file.

There are a few Todo items that it's trying to use here from the Shared project. We'll have to update these to use our new types.

First, we have the Model type. This is the state of our client side information. For the Todo application, it has two properties, Todos and Input. The Input property is the current input in the text box and the Todos property are the currently displayed Todos. So to update this we can change the Todos property to be PredictedSalary to indicate the currently predicted salary from the input of the years of experience. This property would need to be of type string.

type Model =

{ Input: string

PredictedSalary: string }

The next part to update is the Msg type. This represents the different events that can update the state of your application. For todos, that can be adding a new todo or getting all of the todos. For our application we will keep the SetInput message to get the value of our input text box. We will remove the others and add two - PredictSalary and PredictedSalary. The PredictSalary message will initiate the call to the server to get the predicted salary from our model, and the PredictedSalary message will initiate when we got a new salary from the model so we can update our UI.

type Msg =

| SetInput of string

| PredictSalary

| PredictedSalary of string

For the todosApi we simply rename it to predictionApi and change it to use the ISalaryPrediction instead of the ITodosApi.

let predictionApi =

Remoting.createApi()

|> Remoting.withRouteBuilder Route.builder

|> Remoting.buildProxy<ISalaryPrediction>

The init method can be updated to use our updated model. So instead of having an array of Todos we just have a string of PredictedSalary.

let init(): Model * Cmd<Msg> =

let model =

{ Input = ""

PredictedSalary = "" }

model, Cmd.none

Next, we update the update method. This takes in a message and will perform the work depending on what the message is. For the Todos app, if the message comes in as AddTodo it will then call the todosApi.addTodo method to add the todo to the in-memory storage. In our app, we will keep the SetInput message and add two more to match what we added in our Msg type from above. The PredictSalary message will convert the input from a string to a float32 and pass that into the predictionApi.getSalaryPrediction method. The PredictedSalary message will then update our current model with the new salary.

let update (msg: Msg) (model: Model): Model * Cmd<Msg> =

match msg with

| SetInput value ->

{ model with Input = value }, Cmd.none

| PredictSalary ->

let salary = float32 model.Input

let cmd = Cmd.OfAsync.perform predictionApi.getSalaryPrediction salary PredictedSalary

{ model with Input = "" }, cmd

| PredictedSalary newSalary ->

{ model with PredictedSalary = newSalary }, Cmd.none

The last thing to update here is in the containerBox method. This builds up the UI. You may have already noticed that there is no HTML in our solution anywhere. That's because Fable is using React behind the scenes and we are able to write the HTML in F#. We'll keep the majority of the UI so there's only a few items to update. The content is what's currently holding the list of todos in the current app. For our case, however, we want it to show the predicted salary so we'll remove the ordered list and replace it with the below div. This sets a label and, if the model.PredictedSalary is empty it doesn't display anything. But if it isn't empty it does a formatted string containg the predicted salary.

div [ ] [ label [ ] [ if not (System.String.IsNullOrWhiteSpace model.PredictedSalary) then sprintf "Predicted salary: %s" model.PredictedSalary |> str ]]

Next, we just need to update the placeholder in the text box to match what we would like the user to do.

Control.p [ Control.IsExpanded ] [

Input.text [

Input.Value model.Input

Input.Placeholder "How many years of experience?"

Input.OnChange (fun x -> SetInput x.Value |> dispatch) ]

]

And with the button we just need to tell it to dispatch, or fire off a message, to the PredictSalary message.

Button.a [

Button.Color IsPrimary

Button.OnClick (fun _ -> dispatch PredictSalary)

]

With all of those updates we can now run the app again to see how it goes.